ASIMOV’S 3 LAWS OF ROBOTICS & THE QUESTION OF AI ETHICS

Science fiction before Asimov was filled with dangerous killer robots.

As far back as 1818, Mary Shelley dared to question the creation of an artificial being with some form of intelligence in her novel ‘Frankenstein’.

The simple premise is that any halfway intelligent creation of mankind would be flawed. Such a creation could grow jealous of humanity and eventually turn on its creator. So, what can counter such behaviour?

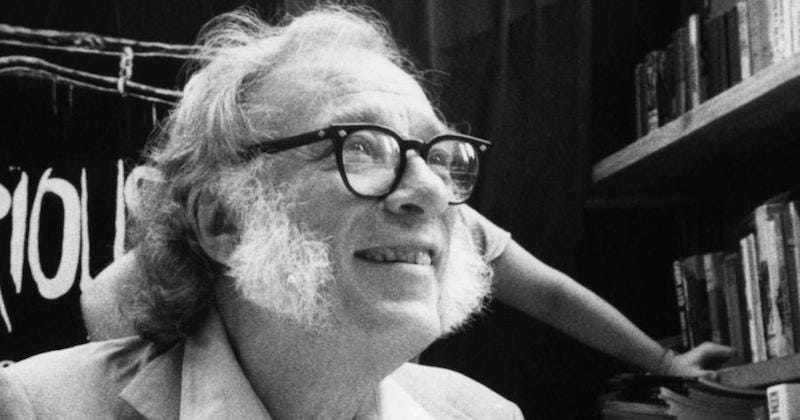

Meet Asimov

Best known for the Foundation series and his robot stories, Isaac Asimov is widely regarded as one of the ‘Big Three’ writers of science fiction, alongside Arthur C. Clarke and Robert A. Heinlein.

Asimov coined the word ‘robotics’ in his short stories of the early 1940s, and the Three Laws were first stated in full in the 1942 story ‘Runaround’. He is credited with having had a massive impact on how people think about the development of artificial intelligence and the field of robotics itself.

The 3 Laws of Robotics

Asimov created 3 laws of robotics to counter the Frankenstein legend and the idea of dangerous robots that go astray. The 3 laws of robotics are as follows:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey orders given to it by human beings except where such orders would conflict with the First Law.

3. A robot must protect its own existence, as long as such protection does not conflict with the First or Second Law.

Asimov used his Three Laws to create a world in which robots were reliable, beneficent, and in line with the purpose for which they were conceived. He later added the “Zeroth Law,” above all the others:

A robot may not harm humanity, or, by inaction, allow humanity to come to harm.
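
One way to read the laws is as a strict priority ordering: a higher law always outweighs any number of lower ones. The sketch below is only an illustration of that ordering, not a description of how Asimov's fictional robots or any real AI system works. The `Action` class, its boolean flags and the `choose_action` function are hypothetical names invented for this example, and the sketch assumes someone has already decided whether an action harms a human, which is the genuinely hard part.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A candidate action with hypothetical, pre-computed outcome flags."""
    name: str
    harms_humanity: bool = False   # would violate the Zeroth Law
    harms_human: bool = False      # would violate the First Law
    disobeys_order: bool = False   # would violate the Second Law
    endangers_self: bool = False   # would violate the Third Law


def violation_key(action: Action) -> tuple:
    """List violations from the highest-priority law to the lowest.

    Python compares tuples element by element and False sorts before True,
    so breaking a higher law always counts as worse than breaking any
    combination of lower laws.
    """
    return (
        action.harms_humanity,
        action.harms_human,
        action.disobeys_order,
        action.endangers_self,
    )


def choose_action(candidates: list[Action]) -> Action:
    """Pick the candidate whose violations are least severe."""
    return min(candidates, key=violation_key)


# A robot is ordered to push a bystander: obeying breaks the First Law,
# refusing only breaks the Second, so refusal wins.
candidates = [
    Action("obey the order to push a bystander", harms_human=True),
    Action("refuse the order", disobeys_order=True),
]
print(choose_action(candidates).name)  # prints "refuse the order"
```

The ordering itself takes a few lines. Filling in flags such as `harms_human` for a real situation is where the difficulty lies.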

A Question of Ethics

Asimov's Three Laws of Robotics were designed to address the fears surrounding autonomous machines. The laws provide an ethical framework meant to ensure that robots remain subservient and beneficial. The fundamental aim is to prevent harm to humans and to maintain control over artificial intelligence.

How possible is it for AI to absorb moral codes?

Artificially intelligent beings should act in the collective best interests of humanity. Asimov's thinking reflects an advanced ethical consideration, suggesting that robots could be entrusted with higher-order decision-making aimed at the long-term survival and prosperity of humanity. The catch is to give them a guide that cannot be corrupted.

The implications of Asimov's laws for the future development of AI are profound. If AI systems are developed and governed by similar ethical principles, the risk of AI behaving unpredictably or maliciously could be reduced.

The challenge lies in the practical implementation of laws created to tame AI. How feasible is it to program nuanced ethical decision-making into AI, and to ensure that robots interpret and apply these laws correctly in complex real-world scenarios?

The European Union's AI Act shows that lawmakers are paying attention to AI, and regulation will not stop there. As AI continues to evolve, ongoing ethical scrutiny and robust regulatory frameworks will be crucial to harnessing its benefits while safeguarding against potential threats. There is, however, another area that lawmakers in every jurisdiction adopting AI need to examine closely.

It is time for humanity to wrestle more with the ethics of the people behind the machines. Those who create artificial intelligence need proper grounding in which moral codes are acceptable to build into AI models and machine learning algorithms.

It is reasonable to advocate for codes of ethics to be embedded in AI programs. We should ask:

Where is the code of ethics for what gets built and what doesn’t?

What would a young AI programmer or creator turn to?

Who gets to use these sophisticated systems and who doesn’t?

What qualifications should AI creators have in terms of ethics and regulatory capacity?

The need for AI ethicists is evident now, while we are still at the start.